#127

Trico on Aug. 27, 2017

— Rant mode on —

Let's talk a bit about human-machine interfaces in science fiction and why they're all crap.

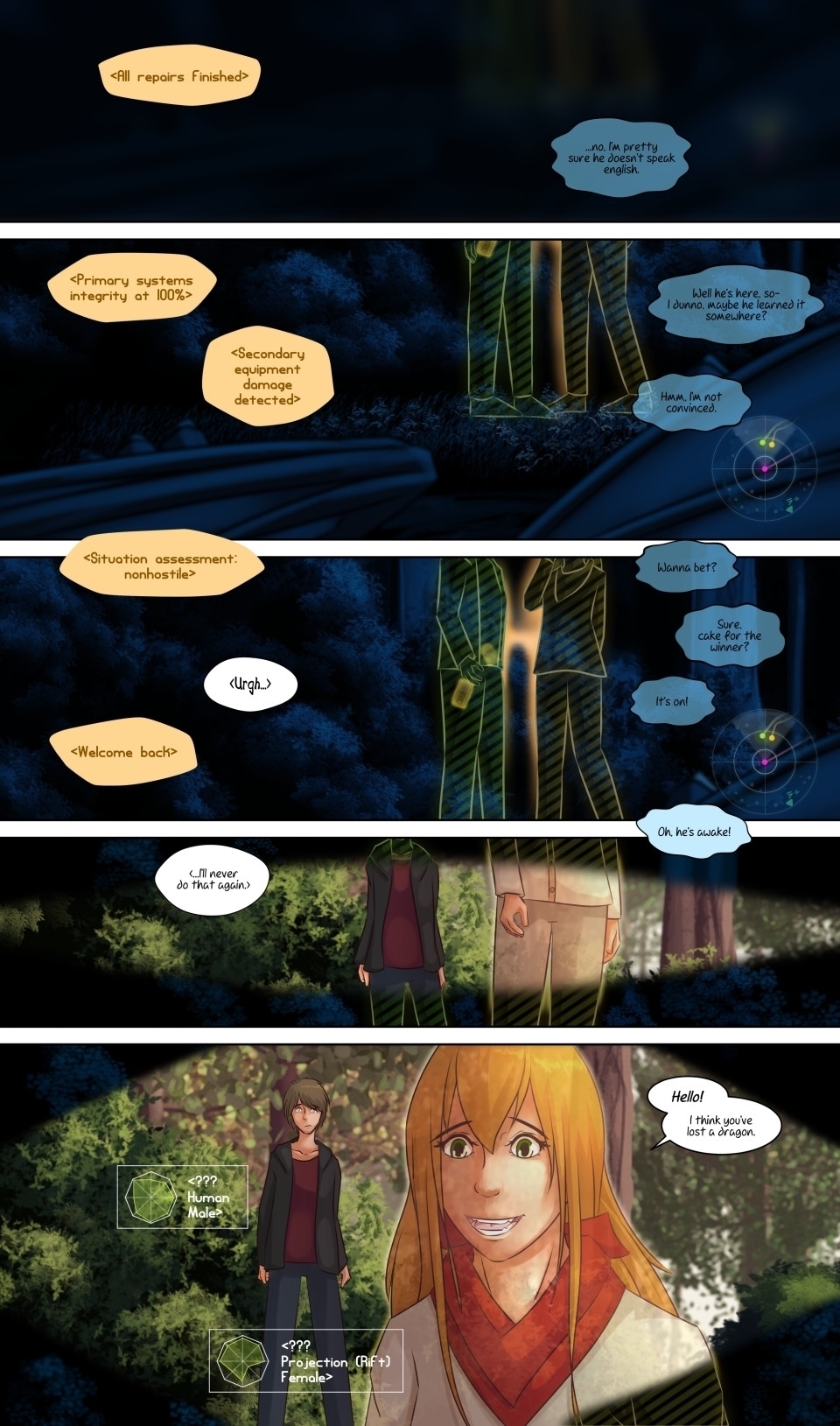

When visual media want to depict futuristic systems, the go-to choice is some form of augmented-reality head-up display. Maybe throw in some voice control or gestures, and you have the super advanced interface trope of 95% of scifi.

From a pragmatic standpoint, it just doesn't make sense. The goal of a well-designed interface should be to deliver maximum situational awareness in minimum time without being distracting. Head-up displays and similar technologies are visual, which inherently slows things down. Even if they are perfectly designed, the brain has to interpret the shapes and colors first, adding a layer of abstraction between the information and the final understanding.

So if we're already assuming advanced systems, why not go one step further? Humans get the best reaction times when they rely on intuition and reflexes, so relevant information should really be fed into those layers via brain interfaces. ‘Just knowing’ the positions of enemies, for example, is so much quicker than looking around for differently colored blobs on a minimap. Similarly, computer input via thoughts is more efficient than talking to some speech interface.

And one step further still: why not let the super advanced computer system take control of the host in critical situations? I'm sure an artificial intelligence would be way better at predicting movements, dodging bullets and fighting bad guys. (In fact, a general AI would be better at everything humans do, but that's a topic for another time…)

To me, the way human-computer interaction is depicted in most scifi feels archaic compared to what technology could actually bring. It's like watching an old movie where a Palm PDA is shown off as the greatest piece of technology of all time.

Unfortunately, the above concepts are reaaaally hard to convey to an audience. There's no easy way to show a brain interface at work (unless you take the Matrix approach and just have someone else tell the viewer what's happening). On the other hand, it's easy to slap together a visual interface (at least for visual media like movies, games and comics), and it works at getting your point across, since viewers have been conditioned by decades of movies to associate these visual cues with advanced future stuff.

So I'm taking a mixed approach here. I like the idea of the brain interface, but I try to convey it through circumstance and visual cues. Classic augmented reality can also be useful for non-critical information (like in the panels - and I know I just bashed minimaps, but they have their place). All in all, I hope to avoid the pitfalls of classic cyborg depictions in favor of a more sensible theory of human-machine interaction.

…

Oh, and I wanted to say that the Ironman interface really sucks from a user-experience standpoint. It's just awful. I mean, who decided that there should be flashy animations all over the field of vision? And why are there entire Wikipedia articles displayed? Nobody can concentrate on anything with this shit going on…

— Rant mode off —

Oh look, a new page!

thunderdavid at 8:07PM, Aug. 27, 2017

Yeah, that Ironman interface was way over the top. If it were me, I would get dizzy looking through that interface.